The AI3: The 3 Metrics to Measure in the AI Era of Software Engineering

Article written by

Andrew Churchill

Remember DORA metrics? Deploy frequency, lead time, change failure rate, and recovery time. Clean. Simple. Everyone knew what they meant.

Then AI broke everything.

Your deploy frequency looks great, but half your code is getting rewritten within two weeks. Lead time is down, but you're burning $5K per engineer per month on AI tools and nobody knows if it's worth it.

The old playbook doesn't work anymore. You need new metrics for a new era.

This is why AI3 exists.

What is the AI3?

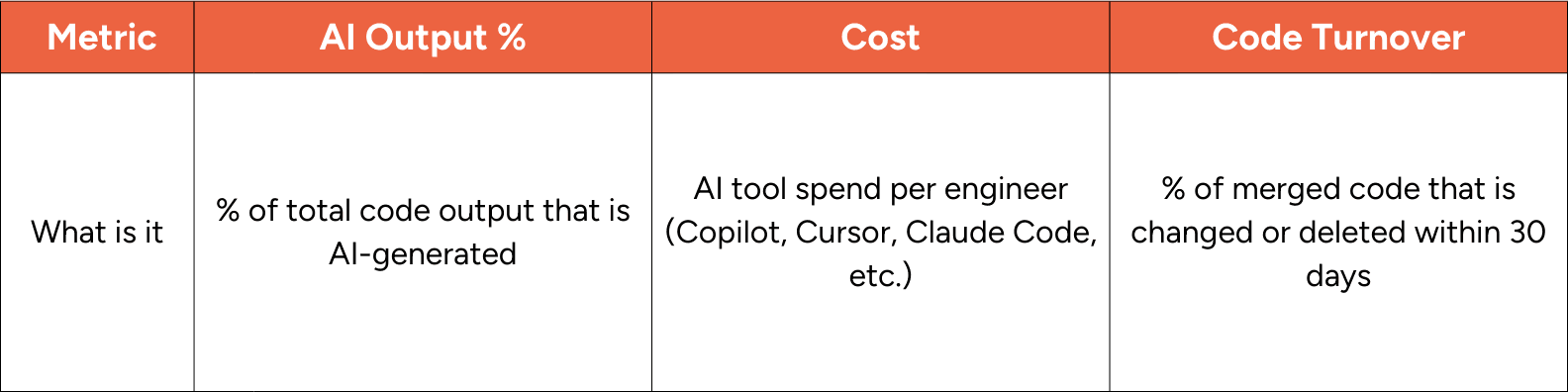

Three metrics to understand the effectiveness of AI in your team:

1. AI Output % – How much of your code output is AI-generated

2. Cost – What you're spending on AI tools per engineer

3. Turnover – How much code gets deleted or rewritten within 30 days

These three numbers tell you everything you need to know about whether AI is making your team more effective.

Here’s how:

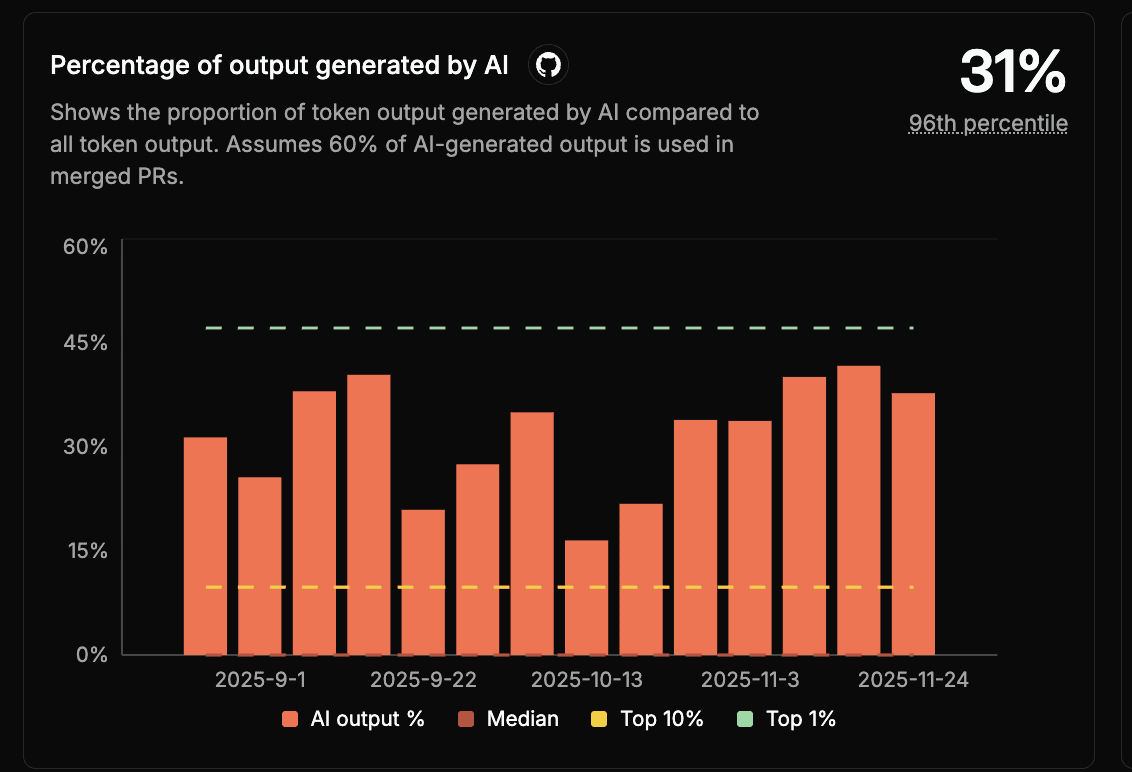

1. AI Output %

This is the foundation. If you're not measuring what percentage of your code output comes from AI, you're flying blind.

Code output is a measure of engineering work that uses ML and domain-specific LLMs to assess each change. Rather than counting lines of code or number of PRs, code output asks "How long would it take an expert engineer to make this change?" and uses ML, AI, and RL to answer it.

Traditional metrics like lines of code and number of PRs were bad before AI and are significantly worse now. That's why code output exists: to provide a useful measure.

Most engineering leaders guess this number. "Probably around 30%," they say. Then they actually measure it and discover it's 12%. Or 58%. The gap between perception and reality is where decisions get made.

But here's what makes this metric powerful: it's not just the overall percentage. It's where AI is being used.

AI writes 65% of your test code but 8% of core logic? That's a pattern.

Junior engineers at 3x AI usage compared to seniors? That's another pattern.

AI code concentrated in certain repos or teams? That's information.

The goal isn't to maximize this number. The goal is to know it, understand it, and make informed decisions based on it.
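
As a minimal sketch of the breakdown described above, the snippet below computes AI output % overall and per category. It assumes each change already carries a code-output score and an AI-attributed share; the `Change` record, its field names, and the sample numbers are hypothetical and are not Weave's data model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Change:
    category: str    # hypothetical label, e.g. "tests" or "core"
    output: float    # hypothetical code-output score for the change
    ai_share: float  # assumed fraction of that output attributed to AI (0..1)


def ai_output_pct(changes):
    """Return AI-attributed output as a percentage of total output."""
    total = sum(c.output for c in changes)
    if total == 0:
        return 0.0
    ai = sum(c.output * c.ai_share for c in changes)
    return 100.0 * ai / total


def ai_output_pct_by(changes, key):
    """Group changes by key(change) and compute AI output % per group."""
    groups = defaultdict(list)
    for c in changes:
        groups[key(c)].append(c)
    return {name: ai_output_pct(group) for name, group in groups.items()}


# Made-up sample data, for illustration only.
changes = [
    Change("tests", output=10.0, ai_share=0.8),
    Change("tests", output=10.0, ai_share=0.5),
    Change("core", output=20.0, ai_share=0.1),
]

overall = ai_output_pct(changes)
by_category = ai_output_pct_by(changes, key=lambda c: c.category)

print(f"overall: {overall:.1f}%")
for name, pct in sorted(by_category.items()):
    print(f"{name}: {pct:.1f}%")
```

Swapping the `key` function (for example, to group by repo, team, or seniority) gives the other cuts mentioned above without changing the calculation.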

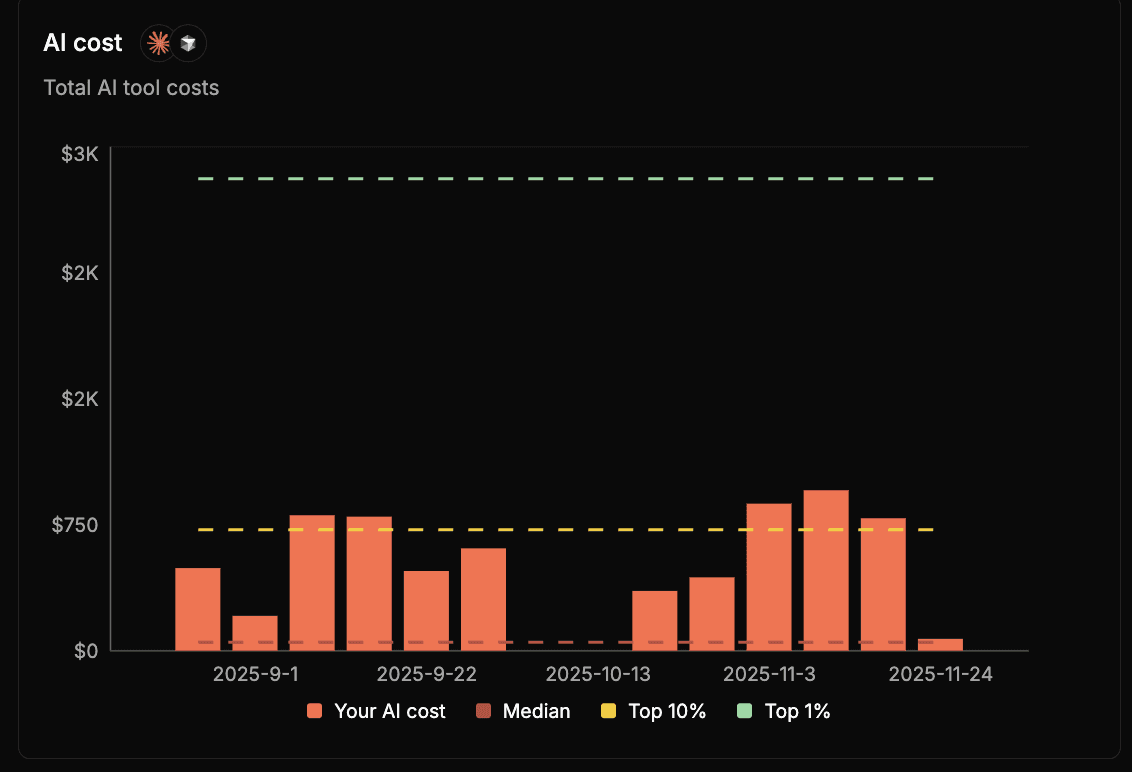

2. Cost

Unlike traditional SaaS where costs are predictable, AI tool costs scale with usage in ways that surprise finance teams every quarter.

Teams are spending thousands of dollars each month on AI tools for engineers. Some are aware of it, and some are not.

The real insight comes when you connect cost to output. Spending $4,000/engineer/year with 15% AI output? That's a very different story than $4,000 with 45% output. Neither is automatically good or bad, but you need to know which world you're living in.
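
One way to make that comparison concrete is to divide cost by AI output %. The ratio below is an assumption for illustration, not a formula this article defines; `cost_per_ai_point` is a hypothetical helper name.

```python
def cost_per_ai_point(annual_cost_per_engineer, ai_output_pct):
    """Dollars per engineer per year for each percentage point of AI output.

    This ratio is an illustrative assumption, not an official AI3 formula.
    """
    if ai_output_pct <= 0:
        raise ValueError("ai_output_pct must be positive")
    return annual_cost_per_engineer / ai_output_pct


# The two scenarios from the paragraph above: same spend, different output.
low_adoption = cost_per_ai_point(4000, 15)
high_adoption = cost_per_ai_point(4000, 45)

print(f"15% AI output: ${low_adoption:.2f} per point")
print(f"45% AI output: ${high_adoption:.2f} per point")
```

The same $4,000 costs three times as much per point of AI output in the first scenario, which is the difference the two "worlds" describe.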

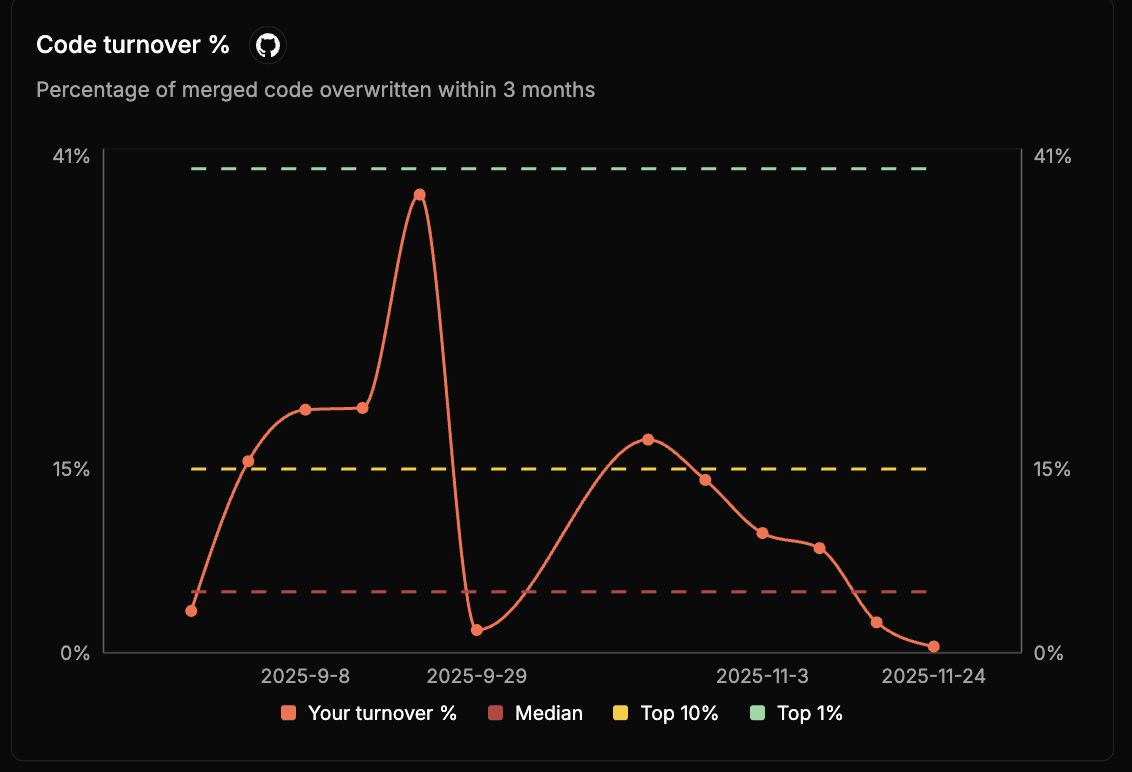

3. Turnover

This is the metric nobody's tracking yet, and it's becoming the most important one.

Code turnover is simple: how much code gets deleted or rewritten within 30 days of being merged.

AI makes it incredibly easy to write code. That's the point. But it also makes it incredibly easy to write code that doesn't stick - code that solves the wrong problem, creates unexpected complexity, or just isn't the right approach.

High turnover isn't automatically bad. Sometimes you need to explore. But if your turnover has doubled since adopting AI and you haven't noticed, you might be mistaking activity for progress. Your velocity looks amazing, but you're running on a treadmill.

The magic happens when you segment turnover by AI vs. human-written code:

AI-generated tests with low turnover? Great, they're sticking.

AI-generated API endpoints with 3x the turnover of human-written ones? Worth investigating.
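
The segmentation above can be sketched as follows. This is a minimal illustration that assumes turnover is counted in lines and that each record is already labeled as AI- or human-written; the `MergedCode` record, its fields, and the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MergedCode:
    origin: str         # "ai" or "human" (assumed label)
    kind: str           # hypothetical category, e.g. "tests" or "api"
    lines_merged: int
    lines_churned: int  # lines deleted or rewritten within the chosen window


def turnover_pct(records):
    """Churned lines as a percentage of merged lines."""
    merged = sum(r.lines_merged for r in records)
    if merged == 0:
        return 0.0
    churned = sum(r.lines_churned for r in records)
    return 100.0 * churned / merged


def turnover_by_origin(records, kind):
    """Compare AI and human turnover for one category of code."""
    result = {}
    for origin in ("ai", "human"):
        subset = [r for r in records if r.kind == kind and r.origin == origin]
        result[origin] = turnover_pct(subset)
    return result


# Made-up sample data, for illustration only.
records = [
    MergedCode("ai", "tests", lines_merged=1000, lines_churned=50),
    MergedCode("human", "tests", lines_merged=500, lines_churned=30),
    MergedCode("ai", "api", lines_merged=400, lines_churned=120),
    MergedCode("human", "api", lines_merged=600, lines_churned=60),
]

tests = turnover_by_origin(records, "tests")
api = turnover_by_origin(records, "api")

print(f"tests: AI {tests['ai']:.1f}% vs human {tests['human']:.1f}%")
print(f"api:   AI {api['ai']:.1f}% vs human {api['human']:.1f}%")
```

In this made-up data the AI-written tests stick about as well as human-written ones, while the AI-written API code turns over at three times the human rate.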

Why These Three Work

There are dozens of metrics you could track. But the AI3 give you something specific: they tell you if AI is actually making your team more effective.

AI Output % tells you if adoption is real

Cost tells you if the economics make sense

Turnover tells you if the output is high quality

Together, they form a feedback loop:

High AI output + high turnover + high cost? You're paying a lot for code that doesn't stick.

High AI output + low turnover + reasonable cost? You've found a winning use case.

Low AI output + high cost? Time to revisit your tooling strategy.
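
The three patterns above can be written down as a small rule-based check. This is only a sketch: the function name `read_ai3` and every threshold are placeholder assumptions, since this article does not define what counts as "high" for any of the three metrics.

```python
def read_ai3(ai_output_pct, turnover_pct, annual_cost_per_engineer,
             output_high=40.0, turnover_high=20.0, cost_high=3000.0):
    """Map the three metrics to one of the patterns described above.

    All threshold defaults are arbitrary placeholders, not benchmarks.
    """
    high_output = ai_output_pct >= output_high
    high_turnover = turnover_pct >= turnover_high
    high_cost = annual_cost_per_engineer >= cost_high

    if high_output and high_turnover and high_cost:
        return "paying a lot for code that doesn't stick"
    if high_output and not high_turnover and not high_cost:
        return "winning use case"
    if not high_output and high_cost:
        return "revisit tooling strategy"
    return "no clear pattern"


# Made-up inputs, for illustration only.
print(read_ai3(55, 35, 4000))
print(read_ai3(55, 8, 1500))
print(read_ai3(12, 10, 4000))
```

Combinations the article does not name fall through to "no clear pattern" rather than being forced into one of the three readings.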

The New Benchmark

Just like DORA gave us "Elite" performers to aspire to, using the AI3 in Weave gives you clear targets: benchmarks for the top 10% and the median across all teams.

Start Measuring Today

The metrics that mattered in 2020 aren't enough for 2025. DORA metrics don't capture the AI layer, and that layer is now 30-50% of what your team does.

You don't need a complex implementation. Start simple:

Measure your AI output percentage this week

Add up your actual AI tool costs per engineer

Track turnover for the next 30 days

This is the foundation. If you're not measuring what percentage of your codebase AI is writing, you're flying blind.
