How to Accurately Measure AI Usage in Engineering Teams

Article written by

Brennan Lupyrypa

As an engineering leader, you've likely invested in AI tools, but can you prove they're actually working? It's a common problem. Many teams see high adoption rates for AI coding assistants, but the results and developer satisfaction are mixed. This creates a frustrating blind spot, leaving you to wonder if the investment is paying off. You may already track a few AI usage metrics, but without the right data, any claim about productivity gains is a guess.

The core challenge is telling the difference between developers simply trying out AI tools and those meaningfully integrating them into their workflows. This article provides a practical framework to help you accurately measure AI usage and understand its true impact on your team's performance.

The Challenge: Why Traditional Metrics Fail in the AI Era

For years, we've tried to measure engineering productivity with metrics like lines of code, pull request counts, or story points. But these have always been poor proxies for actual output, and they're even less useful in the age of AI-assisted development.

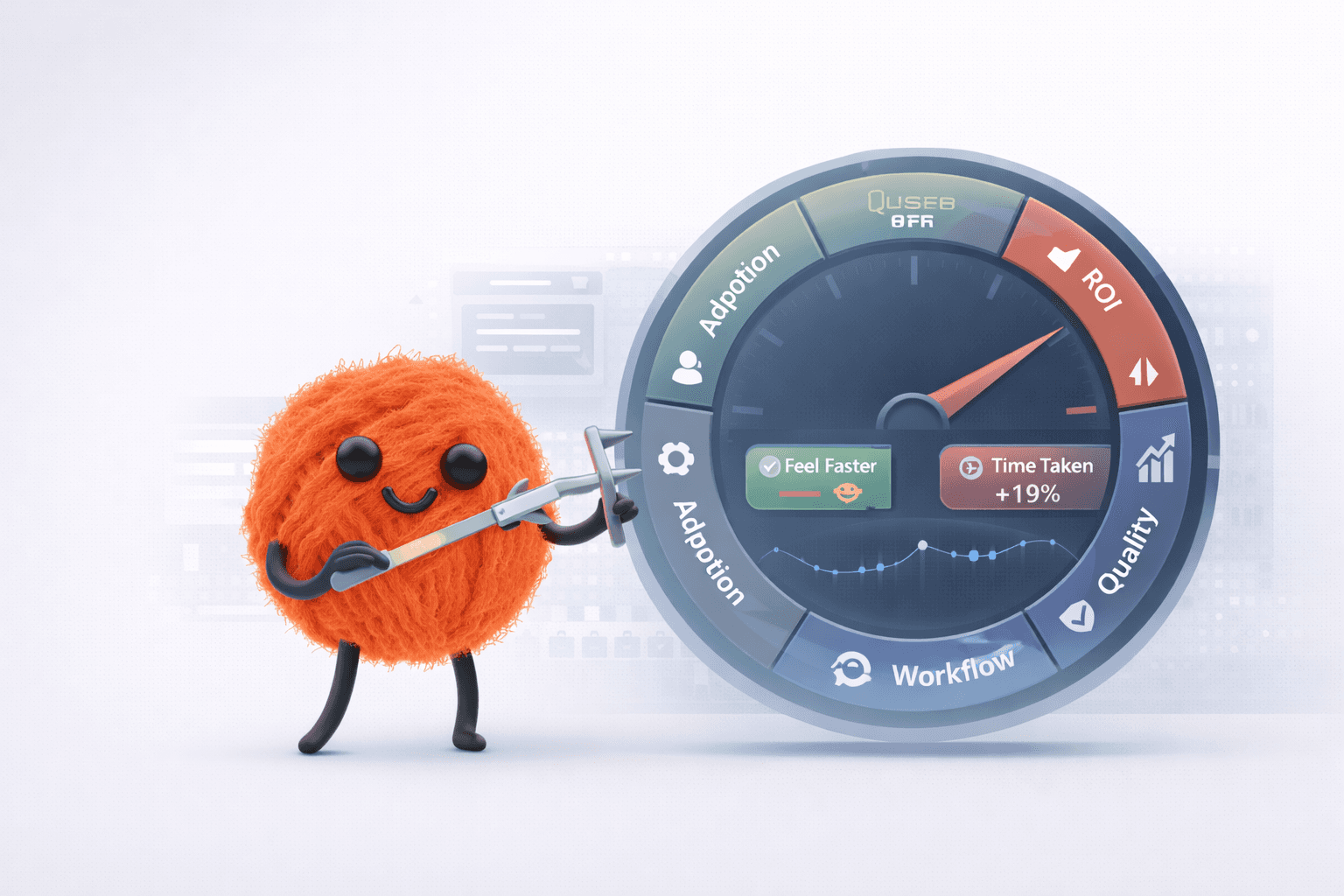

Frameworks like DORA and SPACE are still valuable, but they need to be adapted for AI-driven workflows to provide a complete picture [3]. The gap between perceived and actual productivity gains is a major source of confusion. For example, a randomized controlled trial published in July 2025 found that experienced open-source developers using early-2025 AI tools took 19% longer to complete tasks, even though they believed the tools had made them faster [6]. Gaps like this are why better measurement matters more as AI becomes more deeply integrated into our work.

A Modern Framework for Measuring AI Usage and Impact

To get a clear picture, you need to move beyond simple activity tracking. A modern measurement framework should be multi-faceted, looking at adoption, workflow integration, quality, and business impact. This approach covers everything from initial engagement to bottom-line results, so you can follow AI adoption all the way through to its effect on outcomes.

1. Usage and Engagement Metrics

These metrics answer the question: Who is using AI, and how often?

Adoption Rate: The percentage of team members who have used an AI tool.

Active User Rate: The percentage of engineers who use AI tools daily or weekly, which signals habitual use rather than one-off experimentation.

Engagement Depth: Are users exploring advanced features or just sticking to basic code completion?
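If your AI tools can export usage events, the first two metrics take only a few lines to compute. Here is a minimal Python sketch. The `UsageEvent` record, the seven-day window used for "weekly active," and the sample team are hypothetical, so adapt them to whatever your tools actually log.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class UsageEvent:
    """One recorded AI-tool interaction (hypothetical export format)."""
    engineer: str
    day: date


def adoption_rate(team: set[str], events: list[UsageEvent]) -> float:
    """Share of team members who have used an AI tool at least once."""
    if not team:
        return 0.0
    users = {e.engineer for e in events if e.engineer in team}
    return len(users) / len(team)


def active_user_rate(team: set[str], events: list[UsageEvent],
                     as_of: date, window_days: int = 7) -> float:
    """Share of team members with at least one AI interaction in the
    `window_days` days ending on `as_of` (inclusive)."""
    if not team:
        return 0.0
    start = as_of - timedelta(days=window_days - 1)
    active = {e.engineer for e in events
              if e.engineer in team and start <= e.day <= as_of}
    return len(active) / len(team)
```

Comparing the two numbers is the useful part: a high adoption rate paired with a low active user rate suggests developers tried the tools but haven't made them a habit.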

2. Productivity and Workflow Metrics

Is AI actually making the team faster and more efficient?

Cycle Time: Measure the time from first commit to deployment to see if AI is shortening development cycles [1].

Weave Output Score: Our unique metric at Weave calculates the time it would take an expert engineer to complete the work in a PR, giving you an objective measure of output.

PR Review Cycle Time: In one recent study, AI tools reduced PR review cycle time by 31.8% [5]. Tracking this metric can reveal direct efficiency gains.

AI-Powered Workflows: Count how many regular tasks, like generating unit tests or writing PR descriptions, now consistently involve AI.
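Cycle time and PR review cycle time both come down to subtracting timestamps. This minimal sketch assumes a hypothetical `PullRequest` record with first-commit, review-request, merge, and deploy times. Map those fields to what your Git host and deployment pipeline actually record, since tools define the start and end of "review" differently.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class PullRequest:
    """Timestamps for one PR (hypothetical schema)."""
    first_commit: datetime
    review_requested: datetime
    merged: datetime
    deployed: datetime


def hours(start: datetime, end: datetime) -> float:
    """Elapsed time between two timestamps, in hours."""
    return (end - start).total_seconds() / 3600


def median_cycle_time_hours(prs: list[PullRequest]) -> float:
    """Median time from first commit to deployment (raises on an empty list)."""
    return median(hours(pr.first_commit, pr.deployed) for pr in prs)


def median_review_time_hours(prs: list[PullRequest]) -> float:
    """Median time from review request to merge (raises on an empty list)."""
    return median(hours(pr.review_requested, pr.merged) for pr in prs)
```

The sketch uses medians rather than means because a few long-running PRs can skew an average. Run it separately on AI-assisted and non-assisted PRs, or on periods before and after rollout, to see whether the numbers move.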

3. Quality and Outcome Metrics

Is the AI-assisted work better, or just faster?

Code Turnover: How much AI-generated code is rewritten or deleted shortly after being committed? High turnover can indicate low-quality or irrelevant suggestions.

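Change Failure Rate: This key DORA metric is the percentage of deployments that cause a failure in production [2]. Compare it for deployments with and without AI-assisted code to see whether AI is leading to more production failures.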

Bug Detection and Resolution Rates: Does AI help find and fix bugs faster, or does it introduce new, subtle ones?

Code Review Quality: Use AI-driven tools to assess if code reviews are becoming more or less thorough with the help of AI summaries.
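Change failure rate and code turnover are most informative when you split them by whether the work was AI-assisted. Here is a minimal sketch, assuming you already label each deployment as AI-assisted or not and count how many newly added lines were deleted or rewritten within a fixed window (for example, three weeks). The `Deployment` record and that labeling rule are hypothetical, because how you attribute code to AI depends on what your tools expose.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """One production deployment (hypothetical schema)."""
    ai_assisted: bool      # contains AI-assisted code, per your own attribution rule
    caused_failure: bool   # led to an incident, rollback, or hotfix
    lines_added: int
    lines_churned: int     # added lines deleted or rewritten within the turnover window


def change_failure_rate(deploys: list[Deployment]) -> float:
    """Fraction of deployments that caused a production failure."""
    if not deploys:
        return 0.0
    return sum(d.caused_failure for d in deploys) / len(deploys)


def code_turnover(deploys: list[Deployment]) -> float:
    """Fraction of added lines that were churned shortly afterwards."""
    added = sum(d.lines_added for d in deploys)
    return sum(d.lines_churned for d in deploys) / added if added else 0.0


def compare_by_ai(deploys: list[Deployment]) -> dict[str, dict[str, float]]:
    """Compute both metrics separately for AI-assisted and other deployments."""
    groups = {
        "ai_assisted": [d for d in deploys if d.ai_assisted],
        "other": [d for d in deploys if not d.ai_assisted],
    }
    return {
        name: {
            "change_failure_rate": change_failure_rate(group),
            "code_turnover": code_turnover(group),
        }
        for name, group in groups.items()
    }
```

A gap between the two groups is a prompt to look closer, not a verdict: AI-assisted work may cluster in riskier or simpler parts of the codebase.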

4. Business and ROI Metrics

What's the bottom-line impact of your AI investment?

Return on Investment (ROI): Compare the cost of AI tools to the value created through developer time savings and increased output.

Development Cost per Feature: Track if the cost to deliver new features decreases after widespread AI adoption.

Innovation vs. Maintenance: Track whether AI usage shifts engineering time from bug fixes toward building new features.
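The ROI comparison is simple arithmetic once you decide how to value saved time. This sketch assumes savings are valued at a fully loaded hourly cost, and every input is a hypothetical placeholder, not a benchmark. The result is only as reliable as the hours-saved estimate, and the METR study cited earlier [6] is a reminder that self-reported savings can be overstated.

```python
from dataclasses import dataclass


@dataclass
class RoiEstimate:
    value: float   # dollars of developer time saved
    cost: float    # dollars spent on AI tooling
    roi: float     # (value - cost) / cost


def estimate_monthly_roi(developers: int,
                         hours_saved_per_dev: float,
                         loaded_hourly_cost: float,
                         seat_cost_per_month: float,
                         other_monthly_costs: float = 0.0) -> RoiEstimate:
    """Estimate one month of AI-tool ROI from time savings (all inputs are assumptions)."""
    value = developers * hours_saved_per_dev * loaded_hourly_cost
    cost = developers * seat_cost_per_month + other_monthly_costs
    if cost <= 0:
        raise ValueError("cost must be positive")
    return RoiEstimate(value=value, cost=cost, roi=(value - cost) / cost)


# Hypothetical inputs: 50 developers, 4 hours saved each per month,
# $100/hour loaded cost, $40/seat/month, $1,000/month for training and admin.
est = estimate_monthly_roi(50, 4, 100, 40, other_monthly_costs=1000)
print(f"value=${est.value:,.0f} cost=${est.cost:,.0f} ROI={est.roi:.0%}")
# value=$20,000 cost=$3,000 ROI=567%
```

Including non-seat costs such as training and administration keeps the estimate from looking better than it is.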

How Weave Provides Accurate AI Measurement

This is where Weave comes in. We built our engineering analytics platform for the AI era to provide clarity where traditional tools can't. Weave uses AI to measure AI, analyzing every pull request and code review to understand the complexity and quality of the work, not just the volume of activity.

Our platform offers several key features for AI measurement:

AI Adoption Dashboards: See exactly which individuals and teams are using which AI tools and how effectively.

AI ROI Calculation: Automatically track time saved and productivity gains to clearly justify your tool investments.

Code Quality Analysis: Measure the true quality of AI-generated code and its long-term impact on your codebase.

Deep Research Agent: Ask complex questions about your team's productivity and bottlenecks and get AI-powered answers to many of the questions engineering managers ask most often.

A Practical Guide to Implementing Your Measurement Strategy

Ready to get started? Here’s a simple, actionable plan.

Step 1: Define Goals and Establish a Baseline

First, clarify your objectives. Are you aiming for faster delivery, higher code quality, or an improved developer experience? Before you roll out new tools, establish a known starting point: use a platform like Weave to collect baseline data on your current cycle times, output, and code quality. Without this baseline, you cannot make an accurate before-and-after comparison.
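Once you have a baseline, the before-and-after comparison can be as simple as a percent change in the median. Here is a minimal sketch, assuming per-PR cycle times in hours for a baseline window and a post-rollout window; the function name and sample numbers are hypothetical. A shift in the median shows that something changed, not that the AI tools caused it, since team size, project mix, and seasonality can move these numbers too.

```python
from statistics import median


def percent_change(baseline: list[float], after: list[float]) -> float:
    """Percent change in the median from the baseline period to the post-rollout period.

    Negative values mean the metric went down (for cycle time, an improvement).
    """
    base = median(baseline)
    if base == 0:
        raise ValueError("baseline median must be non-zero")
    return (median(after) - base) / base * 100


# Hypothetical per-PR cycle times in hours.
baseline_hours = [30.0, 42.0, 36.0, 50.0, 28.0]
after_hours = [24.0, 27.0, 30.0, 33.0, 38.0]
print(f"Median cycle time change: {percent_change(baseline_hours, after_hours):+.1f}%")
# Median cycle time change: -16.7%
```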

Step 2: Choose the Right Tools and Metrics

Don't try to track everything at once. Select a few key metrics from each category above that align directly with your goals [4]. Implement an engineering analytics platform like Weave that integrates with your existing tools (like GitHub and your AI assistants) to automate data collection without disrupting developer workflows.
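To give a sense of what automated collection involves, this sketch pulls closed pull requests from the GitHub REST API (`GET /repos/{owner}/{repo}/pulls`) and computes open-to-merge time from each PR's `created_at` and `merged_at` fields. The function names are hypothetical, and pagination, rate limits, and AI-assistant data are deliberately left out; that plumbing is what an analytics platform is meant to take off your hands.

```python
import json
import urllib.request
from datetime import datetime


def fetch_closed_pulls(owner: str, repo: str, token: str) -> list[dict]:
    """Fetch one page (up to 100) of closed PRs via the GitHub REST API."""
    url = (f"https://api.github.com/repos/{owner}/{repo}/pulls"
           "?state=closed&per_page=100")
    req = urllib.request.Request(url, headers={
        "Accept": "application/vnd.github+json",
        "Authorization": f"Bearer {token}",
    })
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def parse_ts(value: str) -> datetime:
    """Parse GitHub's UTC timestamps, e.g. '2025-09-01T09:00:00Z'."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def open_to_merge_hours(pulls: list[dict]) -> list[float]:
    """Hours from PR creation to merge; closed-but-unmerged PRs are skipped."""
    return [
        (parse_ts(pr["merged_at"]) - parse_ts(pr["created_at"])).total_seconds() / 3600
        for pr in pulls
        if pr.get("merged_at")
    ]
```

Keeping the network call separate from the calculation means you can test the metric logic on saved API responses without hitting GitHub.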

Step 3: Analyze, Iterate, and Optimize

Turn your new insights into action. Dashboards help you identify high-performing teams and individuals so you can share their best practices. You can also pinpoint teams that might need more training or support with AI tools. Most importantly, concrete data lets you have productive, fact-based conversations in stand-ups, retrospectives, and budget meetings.

Conclusion: Move from Guesswork to a Data-Driven AI Strategy

Accurately measuring AI usage is no longer optional for high-performing engineering organizations. To do it right, you need a holistic framework that focuses on usage, productivity, quality, and business impact.

Teams that master this will optimize their investments, boost productivity, and gain a significant competitive advantage. The future of engineering analytics is about understanding outcomes, not just activity.

Stop guessing and start measuring. See how top engineering teams are quantifying AI adoption and get started with Weave today to turn your data into decisive action.

Meta Description

Accurately measure AI usage in your engineering team with a framework for tracking adoption, productivity, quality, and true business impact.

Citations

[1] https://monday.com/blog/rnd/engineering-productivity

[2] https://gainmomentum.ai/blog/engineering-productivity-metrics

[4] https://faros.ai/blog/best-engineering-productivity-metrics-for-modern-operating-models

[5] https://arxiv.org/abs/2509.19708

[6] https://metr.org/blog/2025-07-10-early-2025-ai-experienced-os-dev-study

